DiffusionMTL

Learning Multi-Task Denoising Diffusion Model from Partially Annotated Data

CVPR 2024

Abstract

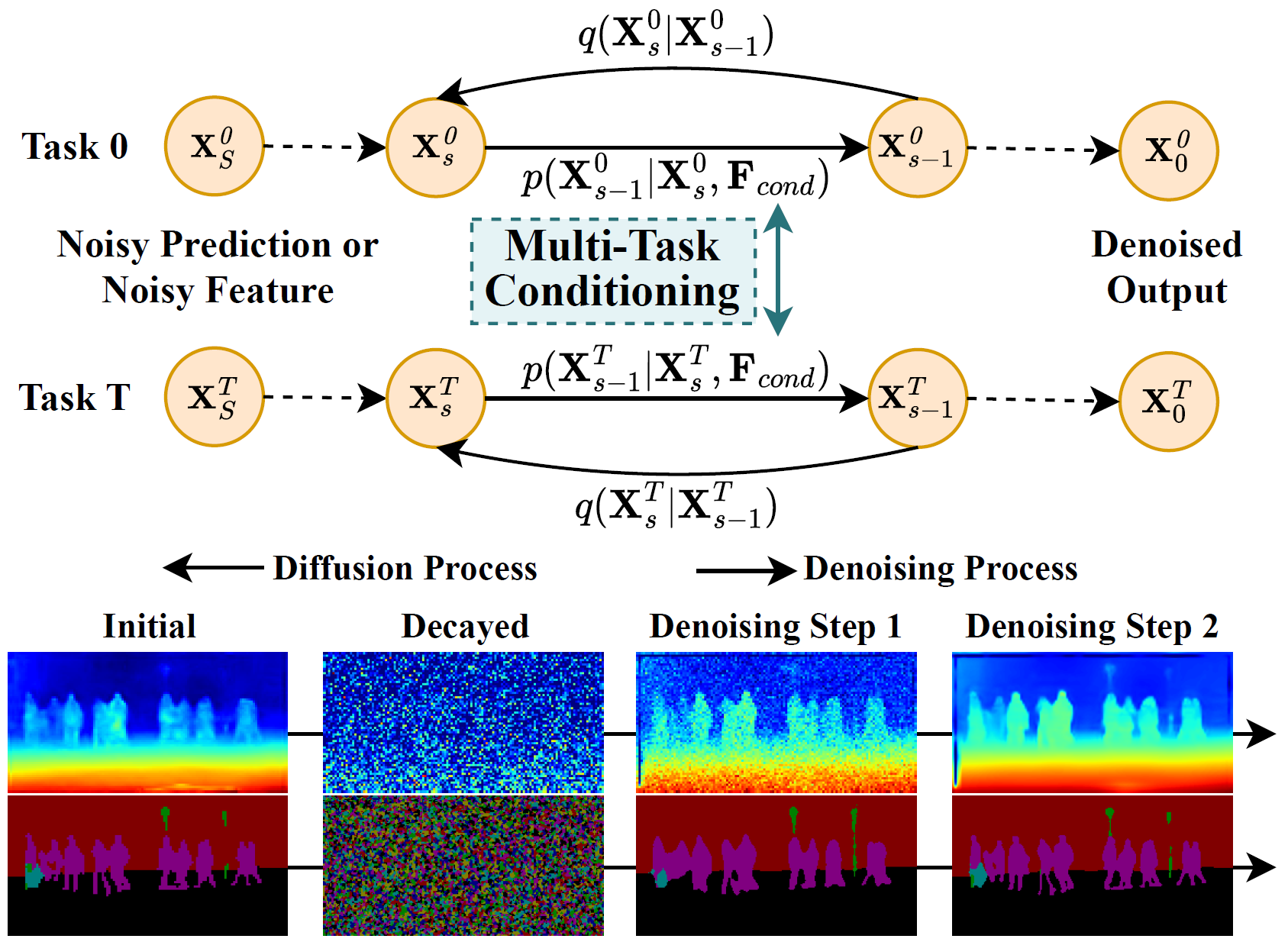

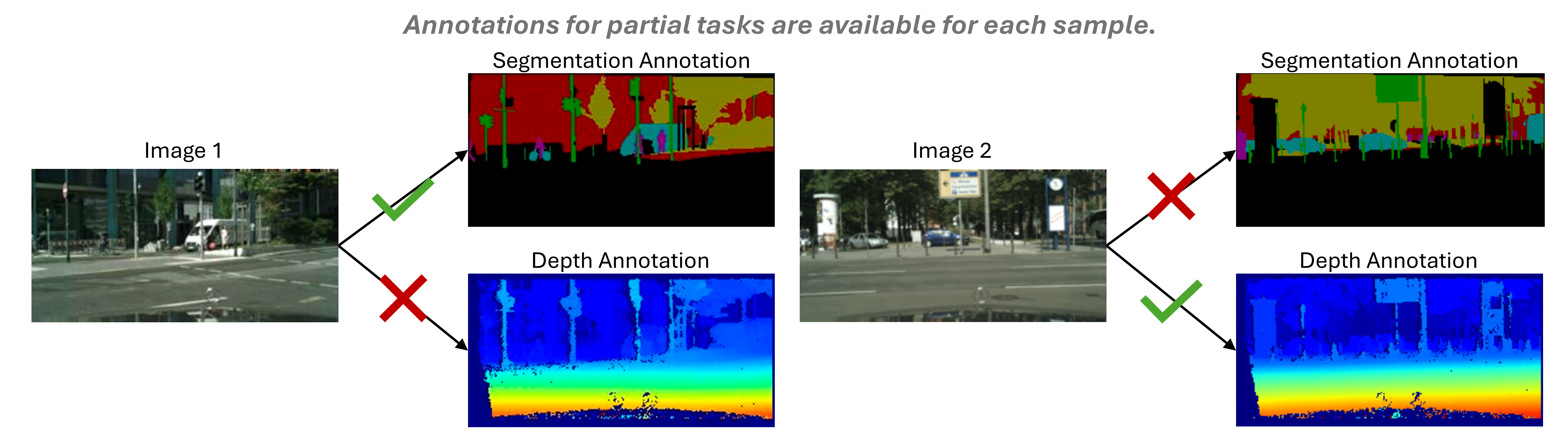

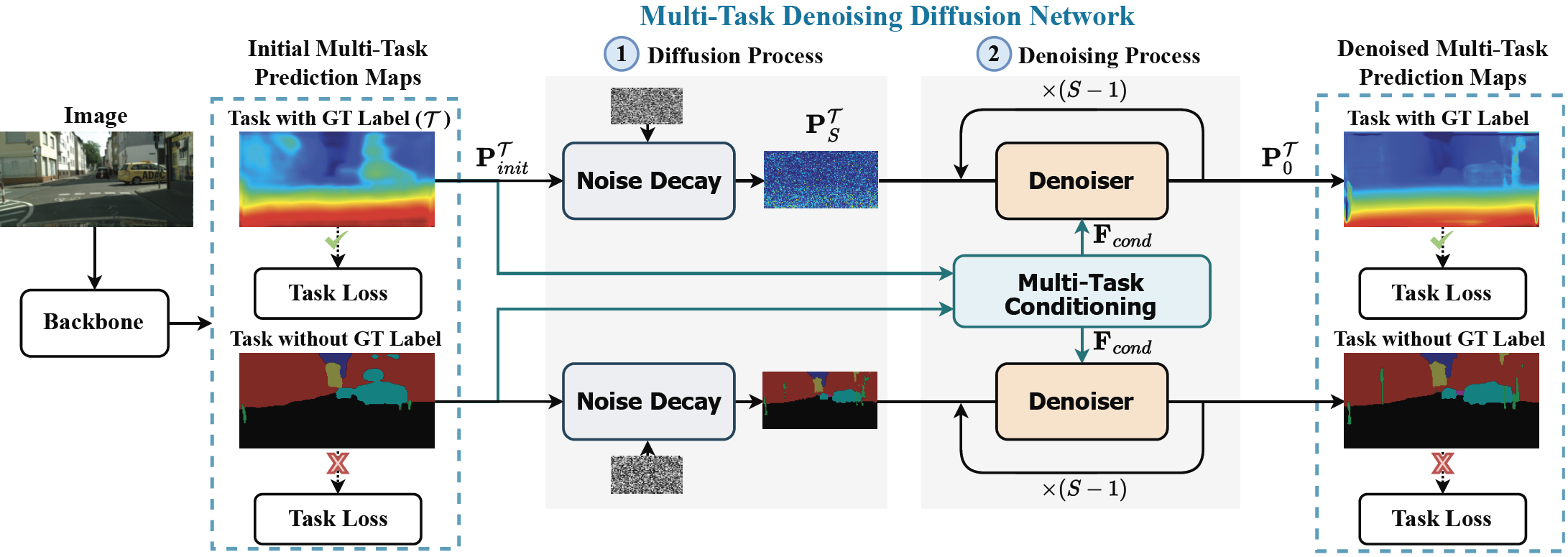

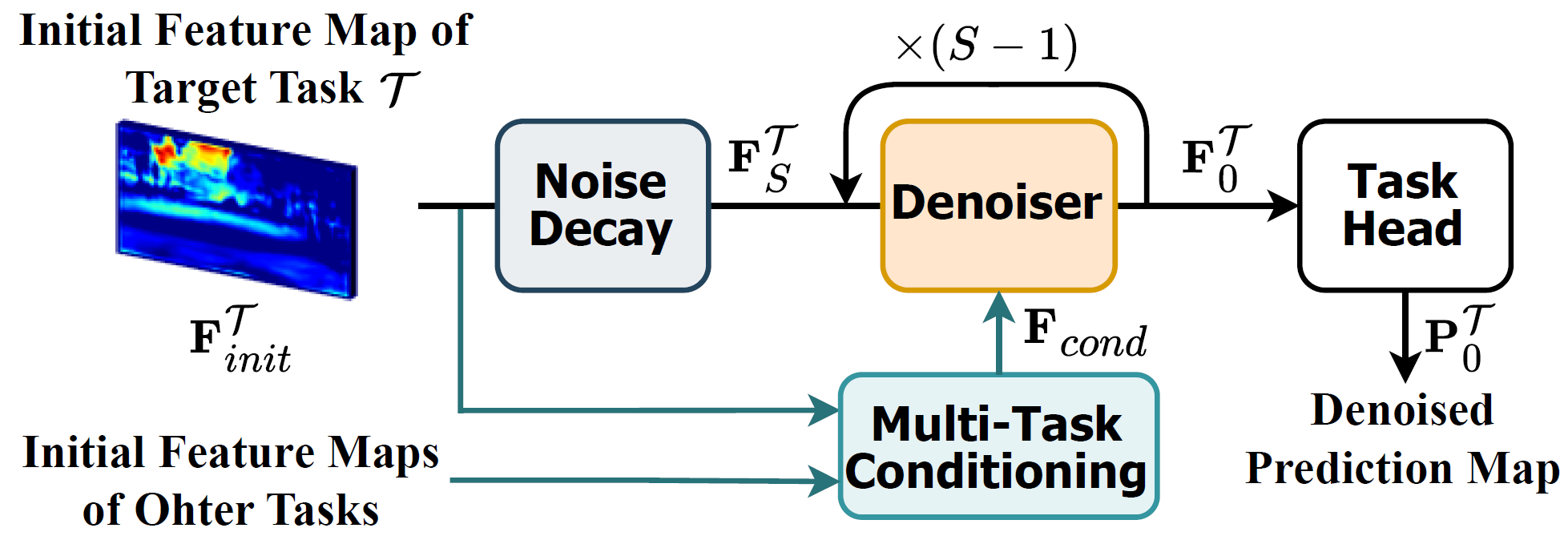

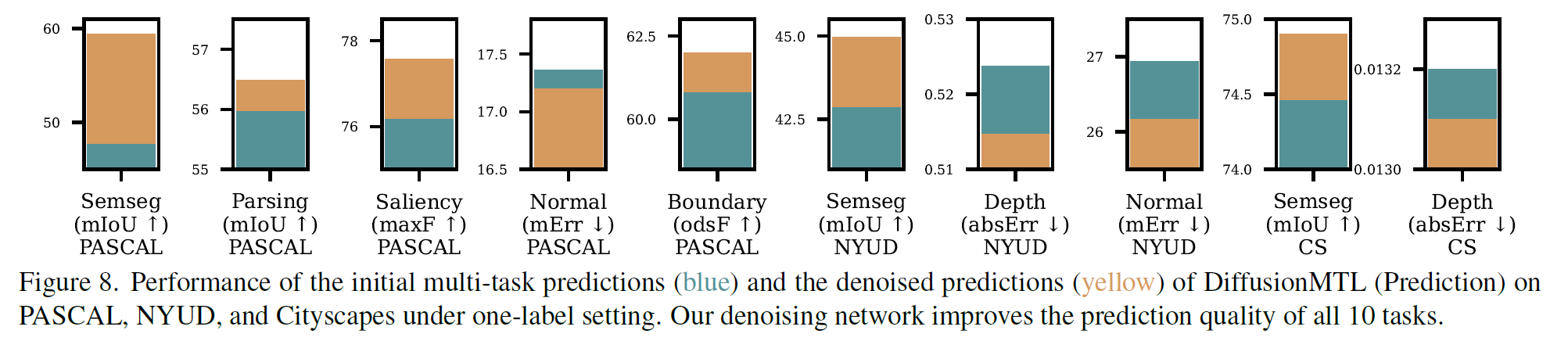

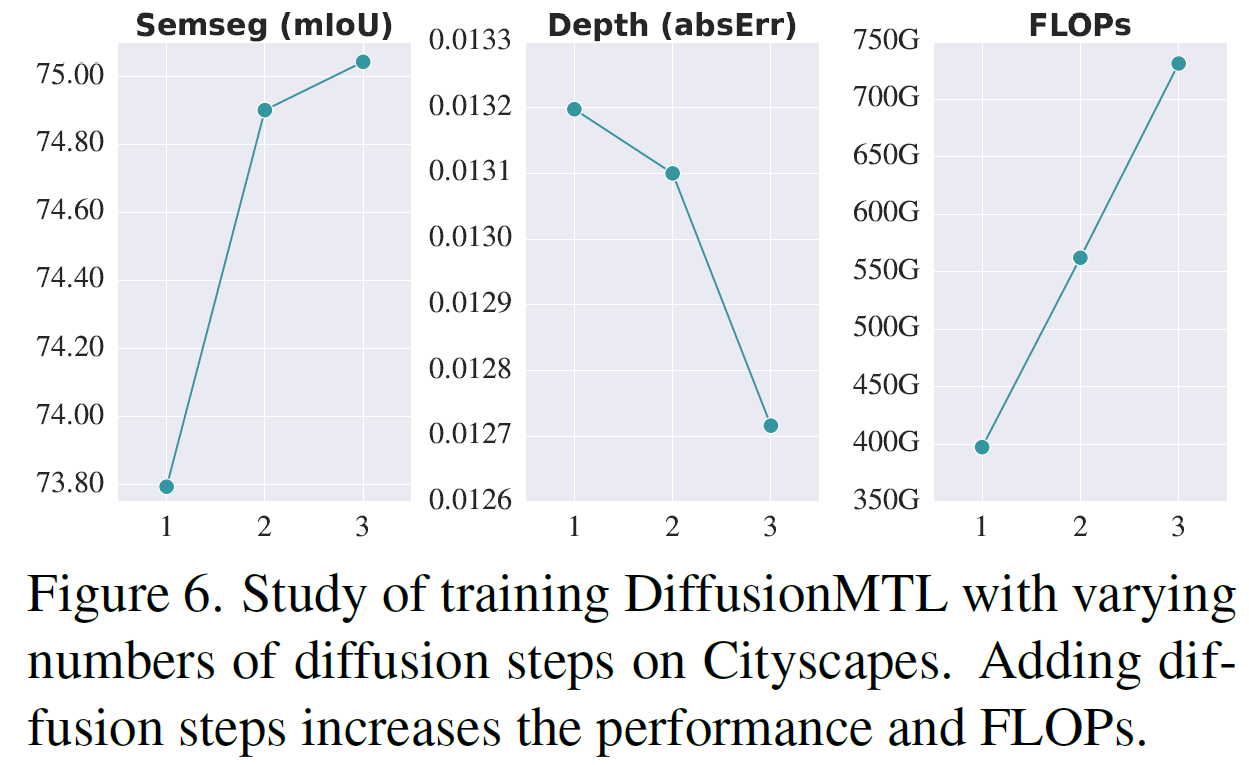

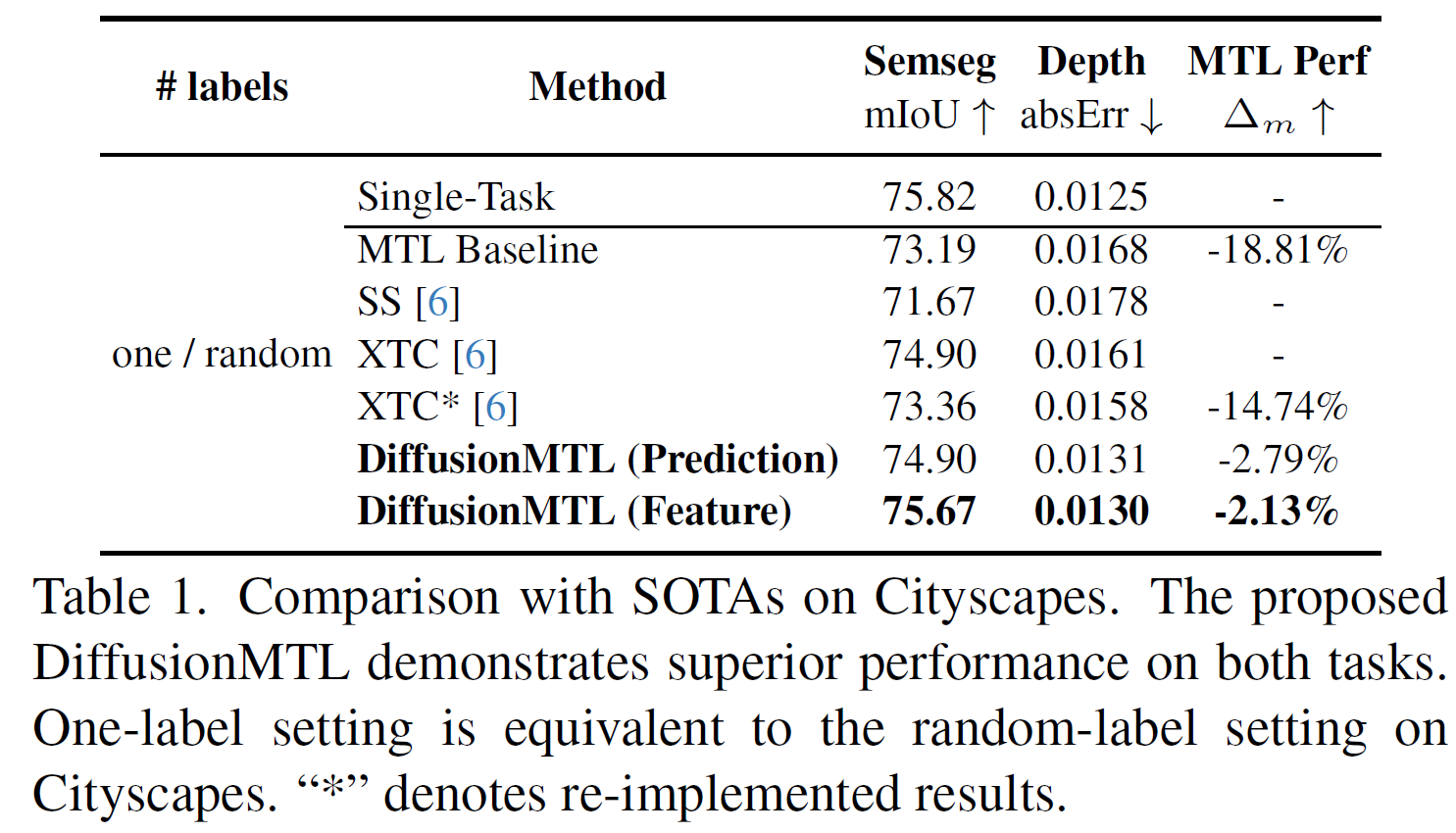

Recently, there has been growing interest in the practical problem of learning multiple dense scene understanding tasks from partially annotated data, where each training sample is labeled for only a subset of the tasks. Missing task labels during training lead to low-quality, noisy predictions, as can be observed in the outputs of state-of-the-art methods. To tackle this issue, we reformulate partially labeled multi-task dense prediction as a pixel-level denoising problem and propose a novel multi-task denoising diffusion framework called DiffusionMTL. It adopts a joint diffusion and denoising paradigm to model the potential noise distribution in the task predictions or feature maps and to generate rectified outputs for the different tasks. To exploit multi-task consistency in denoising, we further introduce a Multi-Task Conditioning strategy, which implicitly exploits the complementary nature of the tasks to help learn the unlabeled tasks, improving denoising performance across tasks. Extensive quantitative and qualitative experiments demonstrate that the proposed multi-task denoising diffusion model significantly improves multi-task prediction maps and outperforms state-of-the-art methods on three challenging multi-task benchmarks under two different partial-labeling evaluation settings.
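The joint diffusion and denoising idea can be illustrated with the generic DDPM forward process applied to a task prediction map. This is a minimal NumPy sketch under stated assumptions: the linear beta schedule, the noise-prediction parameterization, and the helper names `linear_alpha_bar`, `q_sample`, and `predict_x0` are hypothetical and come from the standard DDPM formulation, not from the DiffusionMTL code. The learned denoiser and the Multi-Task Conditioning are deliberately left out; the noise estimate is simply passed in.

```python
import numpy as np

# Assumption: a generic DDPM-style linear beta schedule; DiffusionMTL's actual
# schedule, step count, and parameterization may differ.
def linear_alpha_bar(num_steps: int = 1000, beta_start: float = 1e-4,
                     beta_end: float = 0.02) -> np.ndarray:
    """Return alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def q_sample(x0: np.ndarray, t: int, alpha_bar: np.ndarray,
             noise: np.ndarray) -> np.ndarray:
    """Forward diffusion: corrupt a clean map x0 into x_t with Gaussian noise."""
    a = alpha_bar[t]
    return np.sqrt(a) * x0 + np.sqrt(1.0 - a) * noise


def predict_x0(xt: np.ndarray, t: int, alpha_bar: np.ndarray,
               eps_hat: np.ndarray) -> np.ndarray:
    """Recover a rectified map from x_t given a noise estimate eps_hat.

    In a real model eps_hat would come from a learned (hypothetically
    task-conditioned) denoiser; here it is passed in directly.
    """
    a = alpha_bar[t]
    return (xt - np.sqrt(1.0 - a) * eps_hat) / np.sqrt(a)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    alpha_bar = linear_alpha_bar()
    # Hypothetical single-channel prediction map (e.g. depth), shape (C, H, W).
    pred_map = rng.random((1, 64, 64))
    noise = rng.standard_normal(pred_map.shape)
    xt = q_sample(pred_map, t=500, alpha_bar=alpha_bar, noise=noise)
    # With a perfect noise estimate, the clean map is recovered up to float error.
    print(np.allclose(predict_x0(xt, 500, alpha_bar, noise), pred_map))
```

In practice the quality of the rectified map depends entirely on how well the denoiser estimates the noise; the sketch only shows the algebra that links the noisy and clean maps.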

Framework

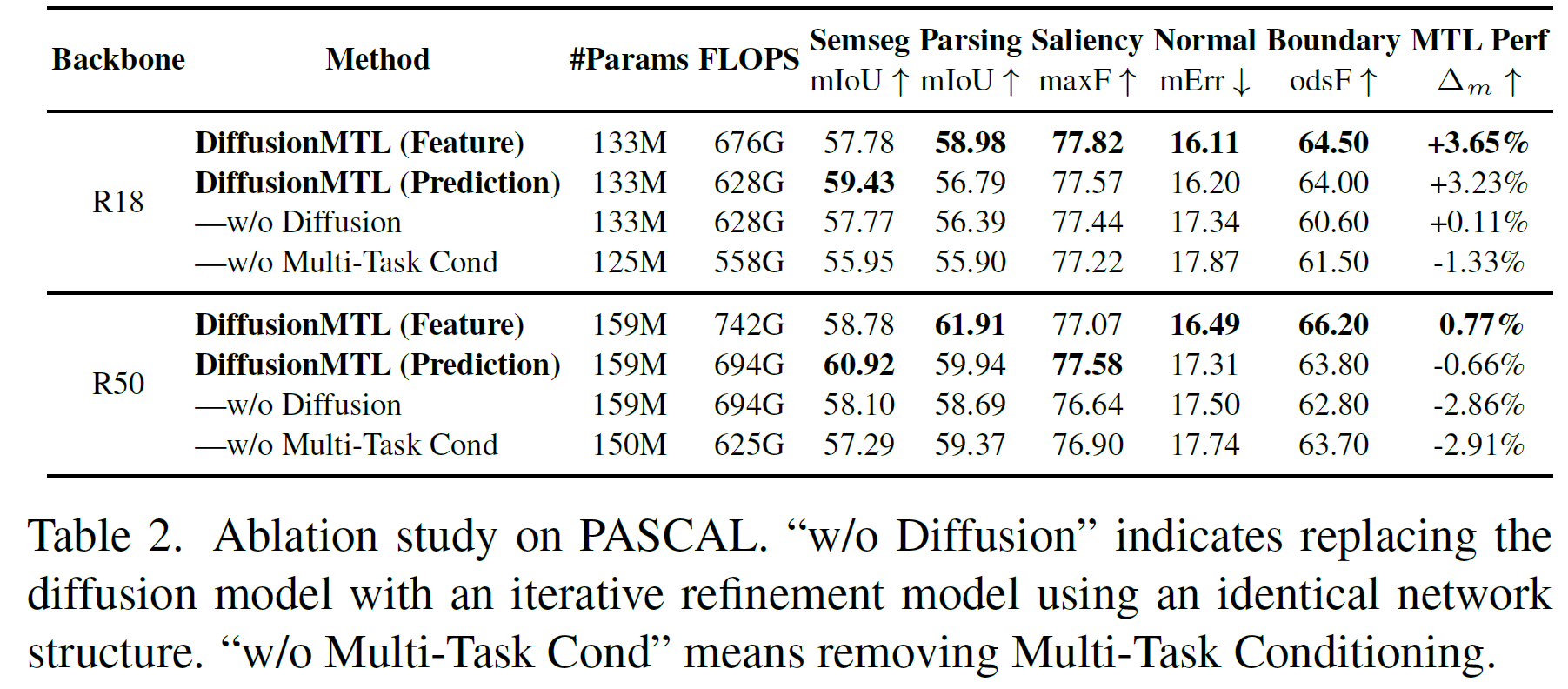

Experiments

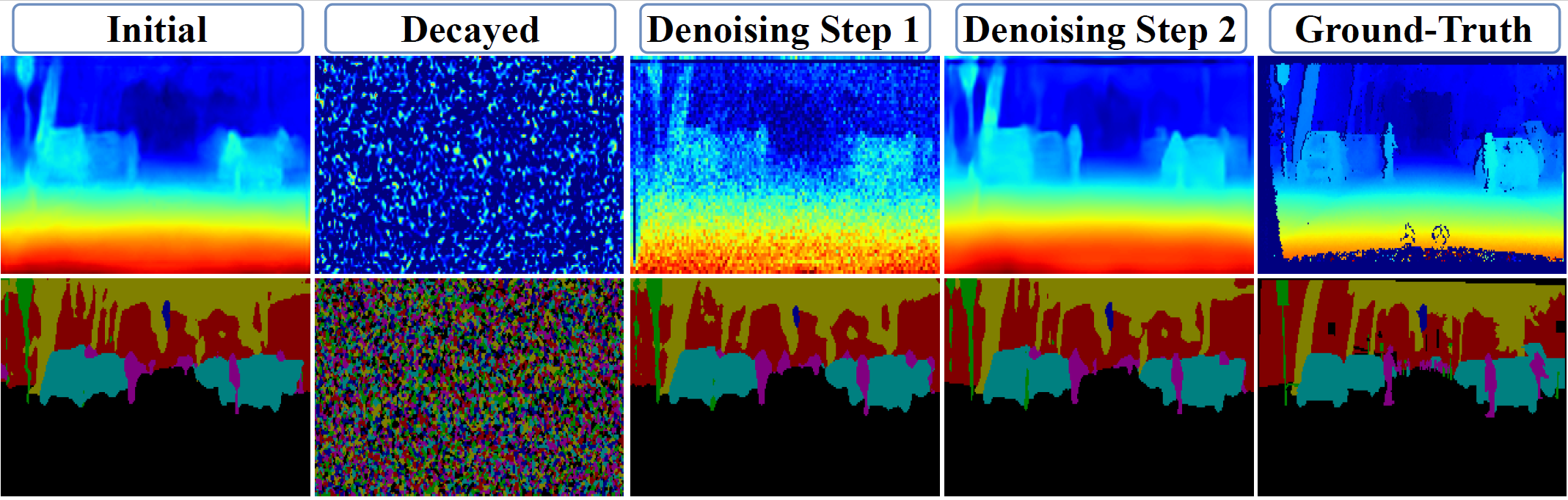

Our DiffusionMTL rectifies noisy inputs and generates clean prediction maps. The model used in this comparison was trained on the Cityscapes dataset under the one-label MTPSL setting.
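In the one-label setting, each training image is annotated for exactly one task, so a supervised loss can only be computed for that task. A minimal NumPy sketch of such masked supervision follows; the task names, the uniform random choice of the labeled task, and the helpers `one_label_mask` and `masked_multitask_loss` are illustrative assumptions, not the benchmark's actual data split or the authors' training code.

```python
import numpy as np

# Hypothetical task list for illustration (assumption, not the benchmark definition).
TASKS = ("semseg", "depth")


def one_label_mask(num_samples: int, num_tasks: int,
                   rng: np.random.Generator) -> np.ndarray:
    """Boolean (num_samples, num_tasks) mask with exactly one labeled task per sample.

    Assumption: the labeled task is drawn uniformly at random per sample.
    """
    mask = np.zeros((num_samples, num_tasks), dtype=bool)
    chosen = rng.integers(0, num_tasks, size=num_samples)
    mask[np.arange(num_samples), chosen] = True
    return mask


def masked_multitask_loss(per_task_losses: np.ndarray,
                          label_mask: np.ndarray) -> float:
    """Average per-(sample, task) losses over labeled pairs only.

    Unlabeled entries contribute nothing to the loss, even if they hold NaN.
    """
    losses = np.asarray(per_task_losses, dtype=float)
    if losses.shape != label_mask.shape:
        raise ValueError("per_task_losses and label_mask must have the same shape")
    num_labeled = int(label_mask.sum())
    if num_labeled == 0:
        return 0.0
    return float(np.where(label_mask, losses, 0.0).sum() / num_labeled)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mask = one_label_mask(num_samples=4, num_tasks=len(TASKS), rng=rng)
    losses = rng.random(mask.shape)  # placeholder per-task losses
    print(mask)
    print(masked_multitask_loss(losses, mask))
```

Because unlabeled tasks receive no direct supervision under this kind of masking, cross-task information such as the Multi-Task Conditioning described in the abstract is what helps the model learn them.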

BibTeX

@InProceedings{diffusionmtl,
  title={DiffusionMTL: Learning Multi-Task Denoising Diffusion Model from Partially Annotated Data},
  author={Ye, Hanrong and Xu, Dan},
  booktitle={CVPR},
  year={2024}
}